FEATURE

Ready Librarian One: How Extended Reality Technology Can Enhance Libraries

by Chad Mairn

Most readers will realize that the title of this article is a reference to the dystopian novel Ready Player One by Ernest Cline. However, the content that follows will not highlight the suffering or injustice found in this and other similar novels. Instead, it will demonstrate the many possibilities that extended reality has to offer libraries and, more broadly speaking, our society.

What Is XR?

Extended reality (XR) is a developing term for when physical things located in the real world start to blend and interact with digital things inside a simulated world. XR technologies are within the spectrum of immersive technologies, which can give users a sense of presence—a feeling that they are there—by merging physical items with digital items and vice versa. Interestingly, XR is evolving from a place that was once considered science fiction to a more realistic embodied internet in which users can enter the web and interact with and create digital content while collaborating with 3D representations of actual users (avatars).

In his keynote address at the Microsoft Ignite 2021 conference, Satya Nadella (chairman and CEO of Microsoft) discussed the metaverse and how the physical and virtual worlds come together. He said that “the metaverse enables us to embed computing into the real world and to embed the real world into computing, bringing real presence to any digital space. For years, we’ve talked about creating this digital representation of the world. But now we actually have the opportunity to go into that world and participate in it.” He continued, “It’s no longer just looking at a camera view of a factory floor. You can be on the floor. … It’s no longer just playing a game with friends. You can be in the game with them.” This is an exciting reality that is beginning to gain traction in our society. Once the metaverse matures, it will be like the 1990s, when people could start texting anyone no matter what cellphone they used and/or what their network provider was. Text messaging is now ubiquitous and device agnostic; the metaverse will be too.

Going further, the “X” in XR is a variable that right now includes 360-degree imagery, 360-degree VR, true VR, AR, mixed reality (MR), spatial computing, holograms, and whatever else may be coming over our digital horizon in the future. The 360-degree imagery and 360-degree VR provide 3 degrees of freedom (3DoF), in which the user is stationary and cannot move through 3D space. Users can look left and right (yaw, y-axis), look up and down (pitch, x-axis), and tilt their head from side to side (roll, z-axis). True VR provides 6 degrees of freedom (6DoF), in which the user is not stationary and can move through 3D space. In addition to yaw, pitch, and roll, users can now move left to right (sway, x-axis), up and down (heave, y-axis), and forward and backward (surge, z-axis). 6DoF, when coupled with stereoscopic visuals and spatial audio, will provide a more realistic experience and a sense of presence while inside VR.
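The difference between 3DoF and 6DoF can be made concrete with a small data model. The following Python sketch is illustrative only: the class names, the units, and the `degrees_of_freedom` helper are assumptions made for this example and do not come from any particular headset's software.

```python
from dataclasses import dataclass


@dataclass
class Pose3DoF:
    """Orientation only: the viewer can turn their head but not move."""
    yaw: float = 0.0    # look left/right, rotation about the y-axis (degrees)
    pitch: float = 0.0  # look up/down, rotation about the x-axis (degrees)
    roll: float = 0.0   # tilt side to side, rotation about the z-axis (degrees)


@dataclass
class Pose6DoF(Pose3DoF):
    """Orientation plus position: the viewer can also move through space."""
    sway: float = 0.0   # left/right along the x-axis (meters, assumed unit)
    heave: float = 0.0  # up/down along the y-axis (meters, assumed unit)
    surge: float = 0.0  # forward/backward along the z-axis (meters, assumed unit)


def degrees_of_freedom(pose) -> int:
    """Count the independent values that the pose tracks."""
    return len(vars(pose))


# A 360-degree video viewer turning their head 30 degrees to the side.
seated_viewer = Pose3DoF(yaw=30.0)
# A true VR user who has also stepped 1.5 meters forward.
walking_user = Pose6DoF(yaw=30.0, surge=1.5)
print(degrees_of_freedom(seated_viewer), degrees_of_freedom(walking_user))
```

In this sketch, a 3DoF experience simply has no fields in which to record a step forward, which is why the user feels fixed in place even though they can look around freely.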

AR works with a camera-equipped device with AR software installed to provide digital content that is overlaid on one’s physical space. Unlike VR, in which users are isolated in a simulated 3D environment, AR users can see their world with digital objects mingling with it. There is a lot of innovation happening with XR, and in the future, users will be able to move from VR to AR environments and back without any obstacles; it will be seamless. AR head-mounted displays (HMDs) can identify who is speaking and will have the power to translate languages, voice tones, accents, and pitch, with conversations appearing as closed-captioned text in real time. This technology will become an invaluable tool for accessibility and other purposes. MR is when virtual and real worlds can see and interact with one another. Microsoft’s now-discontinued HoloLens was used for MR applications. Spatial computing is similar to MR, but it appears to modify physical reality and not just interact with it. Magic Leap uses this term.

What Is the Metaverse?

Web 1.0 gave us the internet. Web 2.0 gave us mobile and social connectivity. Web 3.0 is giving us a decentralized internet to include the metaverse. The metaverse essentially is a 3D digital copy of our physical world in which we can connect and interact with other avatars inside realistic virtual spaces, removing time and place constraints. The term “metaverse” was coined by the writer Neal Stephenson in his 1992 novel Snow Crash. The metaverse concept is not considered science fiction anymore; in fact, one cannot watch financial news programming on television without hearing about metaverse investments at some point.

Think of the metaverse as an embodied internet, a social domain in which people can brainstorm, communicate, and interact with other avatars in simulated spaces while feeling more present in the moment. Remote work and virtual collaboration tools such as Horizon Workrooms, Spatial, Engage, and Immersed can help combat Zoom fatigue by providing a more realistic meeting experience. Although it is a more immersive experience when using VR/AR HMDs, most of the aforementioned collaborative applications also work on mobile devices and computers, so more people can gain access to these innovative services. It has been noted that people tend to be more focused and productive while working inside VR because they are isolated within a virtual boundary and cannot easily be tempted to check their emails and do other work, as can happen when using traditional videoconferencing software.

In Facebook’s early years, its platform allowed users to share experiences using text; then it moved to photographs. Now, society is creating, consuming, and sharing more realistic experiences using video via Instagram reels, TikTok videos, and other video-sharing platforms. As a result, Facebook changed its company name to Meta on Oct. 28, 2021, because it believed that the next phase for society will be sharing more immersive experiences on its social media platform. Mark Zuckerberg, Meta’s CEO, says that by the end of 2030, close to 1 billion people will be in the metaverse, buying digital goods and content to express themselves while working remotely in a virtual office setting (Novet). As a result, it is a good idea for librarians to at least be familiar with the technology to understand its full potential and to help others gain access to it.

For the metaverse to be a truly “meta” experience, users will need to be able to traverse through various platforms without issues. The metaverse must not be developed by one massive company. If that is the case, society will have a Google metaverse, a Meta metaverse, an Apple metaverse, a Microsoft metaverse, an Amazon metaverse, and on and on. It will not be a decentralized internet. Again, being able to navigate through a multitude of platforms is what makes the metaverse concept more relevant and realistic.

Today, when someone quits a game, the wearables or other digital assets that were purchased stay in that game. Imagine, however, if someone could sell or transfer what was purchased in that game to another game. This changes things because spending now becomes investing. There are game designers who are building cryptocurrency exchanges and wallets into their games so that, eventually, those assets stay with the user no matter where they take them (similar to someone carrying a wallet to the grocery store and then to their local tavern). To remain current regarding interoperability standards for an open metaverse, visit the Metaverse Standards Forum at metaverse-standards.org.

Collaborative applications do reduce travel and increase efficiency with regard to productivity and learning, but there has been some discussion that the metaverse could render cities obsolete. That notion seems a bit far-fetched, primarily since XR technologies can help enhance our physical places, not replace them. Going further, Meta is planning to open physical stores to sell its AR/VR devices to access the metaverse. Similarly, libraries have been offering free VR/AR experiences to their users for many years while providing exposure, usually for the first time, to XR and other emerging technologies. Libraries act as portals to the metaverse and are showing people the future of remote working capabilities.

How Will Learning Be Impacted?

The metaverse could become the next course management system in which the real world and the simulated world become the user interface. Coursework can be accessed anywhere and at any time and will positively impact learning outcomes. The “ability to virtually engage in (and repeat) physical tasks while immersing oneself in virtual or real-time augmented environments will add a deep dimension to the physical or virtual classroom, far beyond mere textbook narratives, illustrations and videos” (Schroeder). As a result, XR technologies are starting to complement traditional education practices by immersing users into a space that is reflective of their physical world, in which students can now make the invisible visible, touch the untouchable, safely experience dangerous and forbidden places, and much more. People can now learn on a completely new level while engaging with others, with no time or place restrictions.

Many libraries, K–12 schools, and higher education institutions are using XR technologies to help enhance learning. Students in Full Sail University’s simulation and visualization program are learning how to create XR experiences, but they are also learning inside XR, where they have access to HMDs from a variety of manufacturers (e.g., Oculus, Magic Leap, Microsoft, HTC). One of the courses students take is linear algebra, and what better way to learn a complex math subject than via an XR application in which they can better understand the way objects move and interact in various computer applications. It makes sense to learn about something that is in 3D in an actual 3D space rather than trying to visualize the concepts in 2D.

The Center for Immersive Experiences is part of Penn State University’s Institute for Computational and Data Sciences, and it is supported by teaching and learning with technology and the university’s libraries. The goal is to transform education and help drive digital innovation using XR. The Optima Classical Academy in Naples, Fla., is the first charter school to have its entire classroom and curriculum inside VR instead of using Zoom and other videoconferencing applications. As mentioned previously, being inside an isolated VR space with other student avatars helps with concentration since it is not easy to do other work. The University of Central Florida’s College of Health Professions and Sciences is using hologram technology in its programs to show students different pathologies in realistic 3D form. Keep an eye out for more educational institutions creating digital twin campuses to form what some are calling “metaversities.” This was tried many years ago in Second Life, but the technology was not ready. However, the technology is certainly maturing today.

There is a growing number of educational tools that are utilizing XR to help enhance learning. AnatomyX, an AR anatomy lab and learning platform, gives students learning experiences that they will not forget. Students can interact with various segments of anatomy, pull them apart, and utilize eye-tracking technology to label the parts while gazing at them, ultimately seeing how the parts connect to other body systems. Co-location allows multiple people with different HMDs to interact with the same 3D model in their physical space. This is amazing technology that integrates into course management systems and has embedded assessments, note-taking features, narration, voice and gesture controls, and more. AR is a great fit to include in educational situations since many students are experiential learners and simply learn better by doing tasks instead of reading about them.

How Can Libraries Use XR?

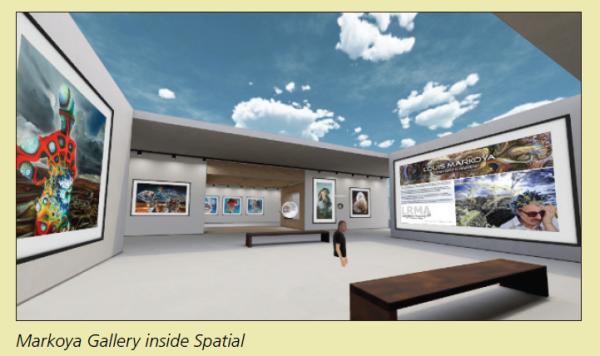

All libraries can use XR technologies to help improve their resources and services with collaborative applications to connect with others inside simulated spaces. St. Petersburg College’s Innovation Lab, which is housed inside one of the school’s joint-use libraries, has been beta testing Spatial since September 2019. Spatial started as a collaborative AR application, allowing people to 3D-scan their faces to get realistic 3D representations of themselves to mingle with holographic avatars from all over the world inside one space. Spatial users can have conversations, present topics on multiple large screens, import and interact with 3D objects, watch videos, listen to music, connect to a variety of storage systems such as Google Drive, add voice and text notes, search the internet, capture photos and video, sketch in 3D, and more. It is more realistic than 2D meeting platforms such as Zoom and Microsoft Teams; in fact, it is almost like being physically in the same room with someone, but it is all done virtually. In 2020, Spatial decided to offer both AR and VR applications that worked on major HMDs such as Meta’s Quest, HoloLens, Magic Leap, and others as well as on mobile devices and desktop computers, allowing more people to use the technology.

The Innovation Lab has used Spatial to construct mock-ups of outer space, create art galleries, interact with avatar journalists, dissect 3D models of frogs in virtual science labs, experience forbidden places such as Chichén Itzá, and much more.

Applications such as Spatial, Horizon, Immersed, and so on have enormous potential for future collaboration and learning, especially in a world that has been impacted by the COVID-19 pandemic. Imagine creating a virtual makerspace by 3D-scanning the room with all of the equipment present to give people living far away or who cannot drive to your library a chance to interact with your realistic space and connect with like-minded individuals.

Scan this code to interact with a 3D object embedded in a document, and view the AR object in your physical space.

Students have been writing research papers for centuries and, in many cases, have had to describe a 3D object (e.g., a sculpture) and include a captioned 2D image, just like in this article. Now, a student or a researcher can embed a 3D object into a document or a webpage by using the “model viewer” web component, which also lets the audience view it as an AR object in their physical space. That way, the audience can see what the real dimensions of the object are instead of seeing it in 2D as an image in a paper. Shadow intensity, camera controls, animations, and other functions can also be included. To learn more about this technology, visit modelviewer.dev.
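As a rough illustration of what embedding such an object involves, the Python sketch below assembles the HTML for a model-viewer element with AR, camera controls, and shadow intensity switched on. The helper function `model_viewer_tag` and the file name `sculpture.glb` are hypothetical, and the hosting page would still need to load the component's script as described at modelviewer.dev.

```python
from html import escape


def model_viewer_tag(src: str, alt: str, shadow_intensity: float = 1.0) -> str:
    """Return a <model-viewer> element as an HTML string.

    src is the path to a 3D model file, and alt is a text description
    for readers who cannot view the model.
    """
    return (
        f'<model-viewer src="{escape(src)}" alt="{escape(alt)}" '
        f'ar camera-controls shadow-intensity="{shadow_intensity}">'
        "</model-viewer>"
    )


# Hypothetical model file for a sculpture discussed in a research paper.
print(model_viewer_tag("sculpture.glb", "A 3D scan of a bronze sculpture"))
```

The resulting snippet can be pasted into a webpage or an HTML-based document. The `ar` attribute is what offers readers on supported phones the option to place the object in their own space.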

In the past, many libraries utilized Google’s Indoor Mapping service to provide detailed floor plans that automatically appeared when a user zoomed in on a building with their smartphone. So, for example, if a library visitor needs to find the science reference desk or a computer lab, the Indoor Mapping application will give them point-by-point navigation to that specific location. Google Maps now includes an AR option that overlays directional data in real time. We are starting to see libraries capturing their spaces in 3D that can later be used to tour their building. Users can enter the embodied internet and be immersed in that space. These tours can be experienced on a mobile device or a computer or via a more immersive experience using XR. Applications such as Matterport are helping users “transform any space into a dimensionally-accurate digital twin,” in which a user can now explore in VR many places that were digitized using the tool. One beautiful example is the Roosevelt Library, which was built in 1929 in San Antonio. To experience this historic library in VR or to learn more, visit the Matterport website at matterport.com and search for Roosevelt Library.

The Innovation Lab has a few Theta 360-degree cameras that students and faculty members can borrow to create immersive experiences. The camera’s image resolution is 5376x2688 pixels, and video can be captured in 4K resolution. There is also an option for livestreaming in 360 degrees and in high resolution. Instead of assigning a traditional research paper, a teacher in a St. Petersburg College English composition class asks students to find an interesting space to capture in 360 degrees. The students then use RoundMe to annotate, add audio commentary, and create portals, hotspots, maps, and welcome screens for their 360-degree images. Part of this assignment is for St. Petersburg College students to work with students in the U.K. to compare and contrast the places that they captured; it is a great conversation starter. Several pop-up art galleries across St. Petersburg College’s multiple campuses and other museums have used the Innovation Lab’s cameras to create 360-degree experiences to post on their websites. To see the technology in action, visit roundme.com.

What Will the Future Bring?

There is a transition happening now in which smartphones are being supplemented and, in some cases, replaced by smart wearable technologies such as smartwatches, smart jewelry, fitness trackers, implantables (i.e., users swallow pills with sensors that can monitor various health metrics), HMDs, and smart clothing (Bösch). Having these computing interfaces sensing motion, recording, and displaying information while providing real-time location information that can be superimposed over physical reality will change how humans interact with the physical world and within the metaverse. Controlling these devices by voice input, gestures, touch, and eventually via brainwave technology will certainly make traversing both the digital and physical worlds easier and more compelling. Companies such as Dent Technology (dentreality.com) are adding digital layers to physical places to place overlays of information on anything that humans would like to learn more about while traveling through these physical places. As mentioned previously, libraries have been mapping their internal buildings using Google mapping technology, so the next phase is to add valuable information and to provide accessibility options to overlay on a library’s physical place.

Buildings are becoming smarter by adding digital intelligence to existing city systems, making it possible to connect applications and put real-time information into the hands of users to help them make better choices (McKinsey Global Institute). Magic Leap, a spatial computing company with billions of dollars in investments, constructed a Magicverse concept a few years ago that it believes will bridge the physical and the digital. The company has a vision of an AR cloud providing this functionality as an open platform that is XR-compliant and works on any device. With XR-compliant devices, people will be able to control mechanical switches and motors or to virtually paint a city’s wall over the air. The Magicverse may one day become a reality and could erode space and time boundaries, allowing for meaningful collaborative work to occur in new, innovative ways.

Imagine what filmmakers, musicians, video game developers, et al., are going to do with this technology. The city of St. Petersburg, Fla., has several murals, some of which are 3D. One of these days, people will be able to walk inside a mural to enter a different world on the city’s streets. Computer vision technologies such as Google Lens, which is a visual search engine for seeing, understanding, and augmenting the real world, will be programmed into applications to help users navigate smart cities and the metaverse, both of which will be seemingly merged into one consistent environment in the future. Lens, which can be used on Android and iPhones, can power an AR version of Street View, highlighting notable locations and landmarks with visual overlays. Google Translate accomplishes real-time translation and can immediately turn a French street sign or restaurant menu into any language by using one’s camera lens.

The field of view (FoV) in a user’s HMD dictates how much of the world they can see around them. The average human can see approximately 220 degrees of surrounding content. A VR headset today can handle approximately 180 degrees. By contrast, AR HMDs such as Magic Leap have approximately a 65-degree FoV. Today, HMDs cannot accommodate one’s full natural FoV, but the technology is quickly evolving. There are rumors that when Meta’s new Quest VR headset comes out, probably in late 2022 or early 2023, it will offer 4K resolution, have outward-facing cameras enabling MR experiences, possess the capability to allow an avatar to mirror the user’s actual facial expressions, and provide color pass-through, which is a camera view of one’s real world when they step outside of their VR boundary. Sony’s PSVR 2 is rumored to offer 4K HDR OLED panels and a resolution of 2000x2040 per eye. Magic Leap 2 is slated to come out in late 2022, but it is not being sold as a consumer HMD and will be used mostly in enterprise situations. Microsoft recently announced that it no longer plans to support its third-generation HoloLens MR headset. There are many other HMDs on the market, and more are being developed. There is a new Qualcomm XR2 version 2 chipset coming out in early 2023, which would make Quest and other XR HMDs up to 30% faster, rendering virtual experiences that are even more robust and realistic.
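To put the FoV figures above in perspective, the short Python sketch below expresses each headset's FoV as a share of the approximate 220-degree human FoV. It is a simplification that treats FoV as a single angle, and the function and constant names are made up for this example.

```python
HUMAN_FOV_DEG = 220  # approximate natural field of view cited above


def fov_coverage(device_fov_deg: float, human_fov_deg: float = HUMAN_FOV_DEG) -> float:
    """Return the fraction of the natural field of view that a headset fills."""
    return min(device_fov_deg / human_fov_deg, 1.0)


for name, fov in [("VR headset", 180), ("AR HMD", 65)]:
    print(f"{name}: {fov_coverage(fov):.0%}")
```

By this rough measure, a 180-degree VR headset fills about 82% of what a person can naturally see, while a 65-degree AR display fills about 30%.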

Inside a 3D Art Gallery

In 2021, author Chad Mairn, a St. Petersburg College librarian and founder of the school’s Innovation Lab, was involved in the first-ever interview inside VR for FOX 13 News, using Spatial. In early 2022, Louis Markoya, a protege of surrealist Salvador Dalí, was interviewed inside VR, again using Spatial. The interview with Mairn also included the Leepa-Rattner Museum of Art curator and three Spatial developers to discuss how Markoya’s innovative 3D art was transformed into a virtual art gallery. The conversation was recorded as a podcast.

Applications resembling the popular Pokémon GO AR game are evolving and essentially allowing everything in the real world to become a computing interface. Holoride, for instance, is turning vehicles into moving theme parks by combining navigational and car data with XR. Holoride is creating elastic content, which is an adaptive function and nonlinear characteristic that lets passengers enjoy constantly changing experiences that adjust to their vehicle’s movement. Their vehicle localization software syncs content with the vehicle’s motion, position, and map data in real time to create a new level of immersion where you will feel what you are actually seeing.

Digital beings, sometimes referred to as virtual humans, have been redirecting phone calls for many years. However, society will start to see 3D volumetric scans rendering digital beings that will be so realistic that, at a glance, humans will be unable to tell if they are indeed real or not. That last sentence brings to mind the replicants in Blade Runner and sounds terrifying, but working with digital beings in the future will be similar to how humans work with robots today; machines and humans will advance into a symbiotic relationship. When machines do redundant tasks that humans do not like to do, it will provide more quality time for humans to focus on being human and on being creative and more innovative. Inside virtual spaces, expect to interact with digital beings that will be trained by AI to help users learn new functionality built into an XR system, aid with navigating the metaverse, entertain, educate, and simply be there for people.

During the COVID-19 pandemic, more people started working and learning from home. As a result, society became more time and location agnostic while using collaborative services within XR applications that closely resemble real-world working spaces. There are tons of possibilities for our future. Librarians will continue to play a role in this evolution by acting, again, as a portal to and learning hub for the metaverse and all that it encompasses. There are questions that remain unanswered. For example, what will be the librarian’s role in acquiring, cataloging, and making 3D assets and other objects findable and usable for their patrons in the metaverse? Will libraries create digital twins of their physical buildings and offer ways for their patrons to access those spaces like they would their actual library? How will utility patents work inside the metaverse? If, for instance, someone creates a lamp and gets it patented, will that patent be the same inside the metaverse, or will the creator need to request another? Will society see an automated copyright enforcement system in the metaverse like YouTube and other content systems have? If so, how will it work? These questions and a multitude of others will likely be presented, and they do not need to be answered at this moment. But being cognizant of what is on the emerging technologies horizon is vital, and we can all rest assured that forward-thinking librarians working in innovative library systems will be willing and able to help get their patrons the information they need in this new world of ours.
